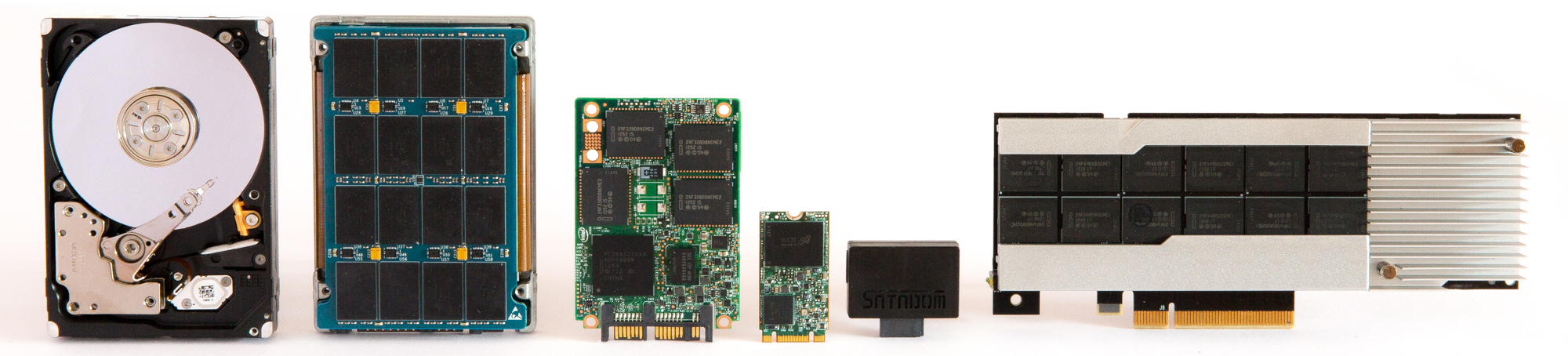

Storage media technology

Non-volatile memory, whether on spinning magnetic surfaces, on NAND flash in its various grades, or on any other persistent memory device: storage media in all their flavors have found their home in the IT world.

It all goes back to 1932, when Fritz Pfleumer, a German engineer, did preliminary work on storing data on magnetic substrates. This work laid the foundation for AEG's legendary "Magnetophon K1".

Years passed, and many inventions around magnetic storage media were made. In 1956 the very first hard disk drive (HDD), IBM's legendary Model 350, was built into an IBM system called the 305 RAMAC, and a triumphal procession began.

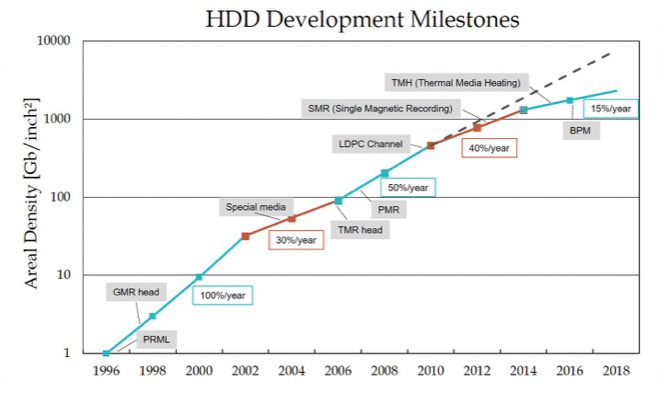

Innumerable inventions and enhancements, both hardware- and process-related, followed. One highlight is the discovery of the GMR (giant magnetoresistance) effect by Peter Grünberg, a physicist who received the 2007 Nobel Prize in Physics for this groundbreaking discovery. He showed that the electrical resistance of thin magnetic multilayers changes strongly with the relative direction of their magnetization, which an external magnetic field can switch.

Not only the read heads of HDDs but also the surfaces of the spinning platters were improved for writability and readability. Around 1965, recording was done by a method called LMR (Longitudinal Magnetic Recording), in which the magnetization lies in the plane of the platters, whereas with PMR (Perpendicular Magnetic Recording) the magnetization points perpendicular to the disk surface.

For a long time, the silicon chips designed specifically for HDDs could not keep pace with the physical and chemical innovations. Nonetheless, the development of a powerful data channel in the 1990s, the PRML (Partial Response Maximum Likelihood) channel, ranks as a significant milestone in HDD history as well. The essence of a modern PRML channel is its ability to detect quite weak data signals, process them mathematically, and finally determine the most likely ("maximum likelihood") data content. The earlier peak-detection method, by contrast, looked for pulses of quasi-fixed amplitude.
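To make the "maximum likelihood" idea concrete, here is a minimal Python sketch. It assumes a PR4 target (each ideal sample is the current symbol minus the symbol two bit periods earlier), bits mapped to +1/-1, a known starting state and additive noise; the function names and the example data are hypothetical. Instead of the Viterbi algorithm that a real read channel uses, it simply tries every candidate bit sequence and keeps the one whose noiseless response lies closest to the received samples. That finds the same maximum-likelihood sequence, but only scales to very short blocks.

```python
from itertools import product

# Assumed PR4 target: ideal sample y[k] = x[k] - x[k-2]
PR4_TARGET = (1, 0, -1)


def channel_response(bits, target=PR4_TARGET):
    """Return the noiseless partial-response samples for a bit sequence."""
    x = [1 if b else -1 for b in bits]      # map bits to +1/-1 symbols
    memory = len(target) - 1
    padded = [-1] * memory + x              # assumed known start state
    samples = []
    for k in range(len(x)):
        # weighted sum of the current symbol and the `memory` previous ones
        samples.append(sum(h * padded[k + memory - i] for i, h in enumerate(target)))
    return samples


def ml_detect(received, target=PR4_TARGET):
    """Brute-force maximum-likelihood sequence detection (Viterbi does this efficiently)."""
    best_bits, best_metric = None, float("inf")
    for candidate in product((0, 1), repeat=len(received)):
        ideal = channel_response(candidate, target)
        # minimizing squared Euclidean distance is ML for white Gaussian noise
        metric = sum((r - y) ** 2 for r, y in zip(received, ideal))
        if metric < best_metric:
            best_bits, best_metric = list(candidate), metric
    return best_bits


# Hypothetical example: a short bit pattern read back with added noise
bits = [1, 0, 1, 1, 0, 0, 1, 0]
noise = [0.3, -0.4, 0.2, -0.5, 0.4, 0.1, -0.3, 0.2]
received = [s + n for s, n in zip(channel_response(bits), noise)]
detected = ml_detect(received)
print(detected == bits)
```

No individual sample is compared against a fixed amplitude threshold here; the decision is made over the whole sequence, which is what lets a PRML channel cope with weak, overlapping pulses.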

As a general rule in the storage media market, each time a technology had been exhausted and the manufacturing process sigmas had been tightened as far as possible, the industry came up with a new technology that paved the way for further growth in areal density.

For example, around the turn of the millennium, TMR (Tunneling MagnetoResistive) read heads were introduced; Seagate Technology was first with them in 2004.

The idea was to increase the sensitivity of the magnetoresistive read element. Classical physics cannot adequately explain the effect; it is a quantum-mechanical phenomenon in which electrons tunnel through a thin insulating barrier and spin polarization, governed by Fermi-Dirac statistics, comes into play.

The sheer number of these innovations and developments is fascinating, and this article cannot come close to listing them all. Physical limits kept being pushed outward: when platter signals became too weak, head-media engineers reduced the fly height of the heads, and when that ran into fly-stability issues, the heads themselves were made more robust with a carbon coating for physical protection. There were even designs on the market in which the data head was meant to "grind" on the media permanently, though with limited market acceptance.

Around the 1980s, the storage media industry also came up with a new category of storage media with no moving parts: NAND flash based Solid State Disks (SSDs). Having no spindle motor and no actuator on board is a clear advantage for longevity, but depending on the product segment, the I/O access profile may wear out an SSD rapidly, in some cases too rapidly.

Different SSD grades, such as SLC, MLC, eMLC and TLC, withstand a given customer application very differently.

Note:

- SLC: Single-Level Cell, 1 bit per cell
- MLC: Multi-Level Cell, 2 bits per cell
- eMLC: enterprise MLC, 2 bits per cell, built for higher endurance
- TLC: Triple-Level Cell, 3 bits per cell
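Why do more bits per cell cost endurance? A cell storing n bits must distinguish 2^n charge (threshold-voltage) states. The sketch below assumes an idealized voltage window normalized to 1.0 and divided evenly between states (a simplification; real windows and level placements differ by vendor and process) and shows how the gap between adjacent states shrinks. A smaller gap leaves less margin for the threshold drift that builds up as cells are programmed and erased. The function names are hypothetical.

```python
def cell_states(bits_per_cell: int) -> int:
    """Number of distinct charge states a NAND cell must resolve."""
    return 2 ** bits_per_cell


def state_spacing(bits_per_cell: int, window: float = 1.0) -> float:
    """Gap between adjacent states in an idealized, evenly divided voltage window."""
    return window / (cell_states(bits_per_cell) - 1)


for grade, bits in {"SLC": 1, "MLC": 2, "TLC": 3}.items():
    print(f"{grade}: {bits} bit(s)/cell, {cell_states(bits)} states, "
          f"spacing {state_spacing(bits):.3f} of the window")
```

Under these assumptions the spacing drops from the full window for SLC to a third for MLC and a seventh for TLC.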

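SSD vendors defined different units of measurement: TBW (TeraBytes Written) states the total amount of data that may be written to the drive over its warranty period, while DWPD (Drive Writes Per Day) states how many times the full drive capacity may be written per day over that period. Clarity, however, was not always given.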
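TBW and DWPD describe the same endurance budget and can be converted into each other once the drive capacity and the warranty period are known. A minimal sketch, assuming a 365-day year and a hypothetical 3.84 TB drive rated at 1 DWPD over a five-year warranty; the function names are illustrative only.

```python
DAYS_PER_YEAR = 365  # assumption: leap days ignored


def tbw_from_dwpd(dwpd: float, capacity_tb: float, warranty_years: float) -> float:
    """Total terabytes that may be written over the warranty period."""
    return dwpd * capacity_tb * DAYS_PER_YEAR * warranty_years


def dwpd_from_tbw(tbw: float, capacity_tb: float, warranty_years: float) -> float:
    """Full-drive writes per day implied by a TBW rating."""
    return tbw / (capacity_tb * DAYS_PER_YEAR * warranty_years)


# Hypothetical drive: 3.84 TB, 1 DWPD, 5-year warranty
tbw = tbw_from_dwpd(1, 3.84, 5)
print(f"{tbw:.0f} TBW")                          # about 7008 TBW
print(f"{dwpd_from_tbw(tbw, 3.84, 5):.2f} DWPD")
```

The conversion also shows why a TBW figure alone is hard to compare across capacities: the same TBW rating means fewer drive writes per day on a larger drive.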

Part of the problem was the initial lack of standardization of SSD ENDURANCE, which in this context can be thought of as an SSD's robustness against wear-out under a specific customer application.

Put simply, the more randomly (rather than sequentially) an application accesses an SSD, the more wear it causes on the NAND cells. The ratio of what the SSD actually writes to the NAND dies to what the host wanted to write is called the Write Amplification Factor (WAF). The higher the WAF, the faster the SSD wears out.
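The WAF is a simple ratio of two counters, and it divides the NAND's raw endurance budget. The sketch below assumes both byte counters are available from the drive (how they are exposed varies by vendor and interface) and uses a hypothetical rating of 3,000 program/erase (P/E) cycles; the budget formula is a first-order estimate, and the function names are illustrative only.

```python
def write_amplification(nand_bytes_written: float, host_bytes_written: float) -> float:
    """WAF = bytes written to NAND / bytes the host asked to write."""
    if host_bytes_written <= 0:
        raise ValueError("host_bytes_written must be positive")
    return nand_bytes_written / host_bytes_written


def host_write_budget_tb(raw_nand_tb: float, pe_cycles: int, waf: float) -> float:
    """First-order estimate of host data writable before the rated P/E cycles are used up."""
    return raw_nand_tb * pe_cycles / waf


# Hypothetical counters: host wrote 100 GB, the SSD wrote 250 GB to NAND
waf = write_amplification(250e9, 100e9)
print(waf)                                   # 2.5
print(host_write_budget_tb(1.0, 3000, waf))  # 1200.0
print(host_write_budget_tb(1.0, 3000, 1.0))  # 3000.0
```

Comparing the two budgets illustrates the point above: the same NAND delivers less than half the host writes if a workload raises the WAF from 1.0 to 2.5.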

Customers should be cautious about vendors' glossy tools: some of them are not a reliable gauge of an SSD's remaining service life.

SSD prices were very high at the beginning, and capacities were considerably lower than those of traditional HDDs. This has changed dramatically: today, a large share of industrial storage capacity is already provided by SSDs instead of HDDs.

The driving factors are the increased I/O performance and the relatively low power consumption.

As in the HDD market around the turn of the millennium, fierce competition is under way in the SSD market and will continue for a while.

Segmentation of SSDs is increasingly coming into play: which SSD grade is suitable for which customer application. The lithography used is also highly relevant to the device's price point.

Exposing very tiny storage structures (the photolithography process) is a gating step in manufacturing the NAND flash for an SSD. Data can be stored in structures of 15 nm, 13 nm or even smaller, and the lithography used is a major factor in an SSD's manufacturing cost.

Besides SSDs, a large number of new non-volatile storage media have been designed and experimented with. ReRAM (Resistive Random-Access Memory), for example, aims to offer the best of two worlds: DRAM-like speed, while user data is still stored in a non-volatile way.

When the voltage applied across a dielectric reaches a specific value, its electrical resistance changes drastically, allowing the insulating layer to become conductive. Related technologies are FRAM, MRAM and PCM (Phase Change Memory).

PCM relies on the electrical resistance of a material in two phases. If the material's atoms have no regular, repeating order, the phase is called amorphous, and its electrical resistance is high. If the atoms are arranged in a repeating order, the phase is called crystalline, and its electrical resistance is quite low.

Intel also presented a technology called 3D XPoint (pronounced "3D crosspoint"), which likewise relies on the phase of the underlying material (amorphous or crystalline, set by Joule heating). It can therefore be compared with phase change memory, but it is considerably more complex in its material composition and functionality.

Another technology along these lines is CBRAM (Conductive Bridging RAM). Metal ions dissolve into an electrolyte material and, under an applied voltage, build a conductive bridge that can be dissolved again. Depending on the chemical additives, the forming process can be critical here. Think of it as controlled conductivity.

Anyone who follows technology news closely will see reports almost every day of new materials and processes that improve one or another of the technologies above.

To bring this report to a close, let's look at a follow-on development that moves storage media as close to the CPU as possible: PCIe SSDs, and NVDIMM modules (JEDEC) that plug into DIMM slots and thus have direct access to the system's main memory bus.

The author hopes that this overview has at least touched on some very remarkable discoveries and developments in the storage media industry, and that the TECODI team has conveyed the unbelievable and unrivaled dynamics of this technology segment.

Many process-oriented enhancements and optimizations have been left out here, even though they too contributed to the dynamic development of the data storage industry. To name just one example, the evolution of Servo Track Writer Stations (STWs) could fill a report of its own.

All in all, this market is subject to a very impressive evolutionary, sometimes even disruptive, process. The flip side is a technical risk that is mostly underestimated, because the long-term experience that exists for traditional HDDs is missing for the newer technologies.

TECODI sees itself as a mediator between technical challenges on the one hand and cost pressure on the other. There is a difference between cheap and inexpensive, and storage media offerings can be either. Much depends on the customer application, and in many cases a storage solution is heavily overdesigned and too expensive. For example, the first SSDs came out with SLC NAND flash rated at 10 DWPD, even at the highest capacity points.

It has since turned out that, for most applications, this is far too much margin: it costs a lot and buys nothing extra. In most cases, the boundaries between the practicable storage media grades are fluid.

TECODI's people have been part of this industry since the late 1980s and have been immersed in its dynamics ever since. The result is broad, well-founded and detailed background knowledge that helps them understand what each of these technologies means for system integration.

It remains imperative to understand the technology roadmap of the storage media industry and, at the same time, where it intersects with system roadmaps, in order to deliver the solutions that are needed.

All of the storage technologies described here have one thing in common:

Each new technology has its own set of challenges, which need to be managed under real customer applications.

TECODI: on board from the very beginning.